Custom Robots.txt Generator for Blogger – Have you created a blog on Blogger and are wondering how to get your content onto Google Discover? Or do you want to guide search engines to crawl the important pages of your site? Well brother, robots.txt is the file that gives you that control! In this article we will talk about a custom robots.txt generator for Blogger and how it can help optimize your blog. This guide is written in simple, conversational language, so it is easy to read and easy to apply to your own blog.

What is Robots.txt and why is it important?

Robots.txt is a small text file located in the root directory of your blog (for a Blogger blog, at an address like https://yourblog.blogspot.com/robots.txt). This file tells search engine crawlers (such as Googlebot) which pages or sections of your site to crawl and which to skip. For bloggers, this means you decide where crawlers spend their time. Keep in mind that robots.txt controls crawling, not indexing: a blocked page can still appear in Google search results if other sites link to it.
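To see how a crawler reads these rules, here is a minimal sketch using Python's standard urllib.robotparser module. The rules, the blog address and the bot name SomeOtherBot are hypothetical examples, not Blogger's real file: Googlebot gets its own group of rules, and every other crawler falls back to the User-agent: * group.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: Googlebot may crawl everything except /search,
# while all other crawlers are blocked from the whole site.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /search

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse rules from text instead of downloading them

post = "https://yourblog.blogspot.com/2025/01/my-post.html"  # placeholder post URL
search = "https://yourblog.blogspot.com/search?q=seo"        # placeholder search URL

print(parser.can_fetch("Googlebot", post))     # True: no Googlebot rule matches the post
print(parser.can_fetch("Googlebot", search))   # False: matches Disallow: /search
print(parser.can_fetch("SomeOtherBot", post))  # False: falls back to the * group
```

Each crawler looks for the group that names it, and only uses the User-agent: * group when no specific group matches.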

Why is it important?

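- Saves crawl budget: Google spends only a limited amount of time and resources crawling each site. Robots.txt helps make sure that time goes to your important pages.

- Keeps low-value pages out of the crawl: If there are pages you don't want crawlers to visit, robots.txt can block them. Note that the file itself is public and is not a security tool, so never rely on it to hide private content.

- Supports SEO: Blocking duplicate or unnecessary pages helps search engines focus on your best content, which can help your blog perform better in search.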

Now, how do you create a custom robots.txt for Blogger? Let's go step by step!

Custom Robots.txt Generator for Blogger: How to use it?

Manually editing a robots.txt file in Blogger can be a little tricky, but don't worry! With online tools and a few simple steps, you can create the right robots.txt file for your blog. A custom robots.txt generator gives you the flexibility to set rules according to your blog's specific needs.

Step 1: Understand your blog goals

First, decide what you want:

- Do you want Google to crawl the entire blog, or block specific pages?

- Does your blog have duplicate content (such as archive pages) to avoid?

- Do you want to optimize for Google Discover?

Step 2: Choose a Trusted Robots.txt Generator Tool

There are many free tools online that can generate a custom robots.txt file for Blogger. Some popular options are:

- SEOptimer Robots.txt Generator: Simple and beginner-friendly.

- Ryte Robots.txt Tool: Detailed and for advanced users.

- XML-Sitemaps.com: Can create robots.txt along with sitemap.

These tools ask you for basic information, such as:

- URL of your site.

- Which pages to allow or disallow.

- Rules for specific crawlers (such as Googlebot, Bingbot).
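Under the hood, a generator simply turns those answers into text. Here is a minimal Python sketch of that idea; build_robots_txt is a hypothetical helper written for this article, not the code behind any of the tools above, and it assumes your sitemap lives at /sitemap.xml on your blog's address.

```python
def build_robots_txt(site_url: str, rules: dict[str, dict[str, list[str]]]) -> str:
    """Hypothetical helper: build robots.txt text from per-crawler allow/disallow lists."""
    lines: list[str] = []
    for user_agent, paths in rules.items():
        lines.append(f"User-agent: {user_agent}")
        for path in paths.get("disallow", []):
            lines.append(f"Disallow: {path}")
        for path in paths.get("allow", []):
            lines.append(f"Allow: {path}")
        lines.append("")  # a blank line separates one crawler group from the next
    # Assumption: the sitemap sits at /sitemap.xml under the blog address.
    lines.append(f"Sitemap: {site_url.rstrip('/')}/sitemap.xml")
    return "\n".join(lines) + "\n"


robots = build_robots_txt(
    "https://yourblog.blogspot.com",
    {"*": {"disallow": ["/search"], "allow": ["/"]}},
)
print(robots)
```

The three inputs map directly onto the questions a generator asks: the site URL feeds the Sitemap line, the dictionary keys are the crawlers, and the lists are the pages to allow or disallow.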

Step 3: Understand the Default Robots.txt for Blogger

Blogger automatically provides a default robots.txt that looks something like this:

```text
User-agent: *
Disallow: /search
Allow: /
```

This is a basic setup without any customization. If you want more control over what crawlers visit, for example to keep duplicate pages out of the crawl, you'll need to tweak it.
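To check what these default rules actually block, the sketch below feeds them to Python's urllib.robotparser. The blog address and the four paths are placeholders chosen for illustration. Note that this parser applies the first matching rule in file order, while Google picks the most specific match; for these three lines both approaches give the same answer.

```python
from urllib.robotparser import RobotFileParser

# The default rules quoted above.
DEFAULT_RULES = """\
User-agent: *
Disallow: /search
Allow: /
"""

BLOG = "https://yourblog.blogspot.com"  # placeholder blog address

parser = RobotFileParser()
parser.parse(DEFAULT_RULES.splitlines())

# Hypothetical sample paths: a post, a static page, a label page and a search page.
results = {
    path: parser.can_fetch("Googlebot", BLOG + path)
    for path in ["/2025/01/my-post.html", "/p/about.html", "/search/label/SEO", "/search?q=robots"]
}
for path, allowed in results.items():
    print(path, "->", "crawl" if allowed else "skip")
```

Label pages on Blogger live under /search/label/, so the default Disallow: /search line already keeps them out of the crawl.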

Step 4: Add Custom Rules

In a custom robots.txt file, you can include the following:

- Block unnecessary pages: Block archive pages or labels. On Blogger, label pages live under /search/label/, so Disallow: /search already covers them.

- Allow important pages: Ensure that your blog posts and static pages are crawled.

- Add a sitemap: Add the URL of your sitemap to help crawlers navigate.

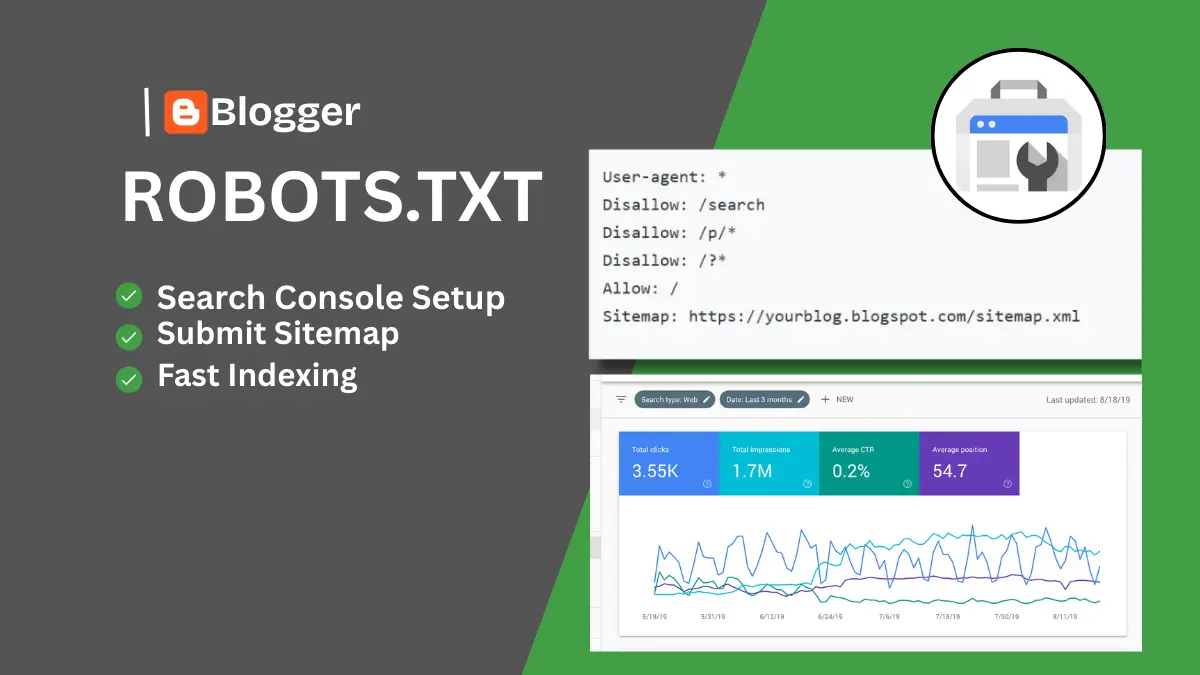

A sample custom robots.txt for Blogger could be this:

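```text
# Custom Robots.txt for Blogger
User-agent: *
Disallow: /search
# Disallow: /p/*   (uncomment only if you do NOT want static pages crawled)
Disallow: /?*
Allow: /

Sitemap: https://yourblog.blogspot.com/sitemap.xml
```

Explanation:

- Disallow: /search: Blocks search result pages and label pages (which live under /search/label/), because they mostly repeat content from your posts.

- Disallow: /p/*: Would block every static page (URLs under /p/, such as About, Contact or Privacy Policy). It is commented out here because Step 4 recommends keeping static pages crawlable; remove the # only if you really want those pages skipped.

- Disallow: /?*: Blocks homepage URLs that carry a query string (for example /?m=1), which are variations of the homepage.

- Allow: /: Lets crawlers reach everything that is not blocked above.

- Sitemap: Provides the sitemap link of your blog.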
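Python's built-in urllib.robotparser only does plain prefix matching and does not understand the * wildcard used in rules like Disallow: /?*, so here is a small hand-written matcher instead. It is a minimal sketch of the matching behaviour described in RFC 9309 and Google's documentation (the longest matching rule wins, Allow wins a tie, * matches anything, a trailing $ anchors the end); the function names and sample paths are hypothetical, and it is not Google's actual parser.

```python
import re


def rule_to_regex(pattern: str) -> re.Pattern:
    """Turn a robots.txt path pattern into a regex: '*' matches anything, a trailing '$' anchors the end."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Return True if `path` may be crawled: the longest matching rule wins, Allow wins ties."""
    best_length, allowed = -1, True  # no matching rule means crawling is allowed
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty Disallow value blocks nothing
        if rule_to_regex(pattern).match(path):  # match() anchors at the start of the path
            is_allow = directive.lower() == "allow"
            if len(pattern) > best_length or (len(pattern) == best_length and is_allow):
                best_length, allowed = len(pattern), is_allow
    return allowed


# The active rules from the sample above (the commented-out /p/* line is left out).
SAMPLE_RULES = [("Disallow", "/search"), ("Disallow", "/?*"), ("Allow", "/")]

for path in ["/2025/01/my-post.html", "/p/about.html", "/?m=1", "/search/label/SEO"]:
    print(path, "->", "crawl" if is_allowed(SAMPLE_RULES, path) else "skip")
```

Adding ("Disallow", "/p/*") to the list makes /p/about.html come back as skip, which is a quick way to see what uncommenting that line would do.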

Step 5: Update Robots.txt in Blogger

To edit robots.txt in the Blogger dashboard:

- Open the Blogger dashboard.

- Go to Settings and scroll down to Crawlers and indexing.

- Turn on the Enable custom robots.txt option.

- Click Custom robots.txt and paste your generated robots.txt code.

- Save.

Step 6: Test it

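After updating the robots.txt file, it is important to test it. Open https://yourblog.blogspot.com/robots.txt (with your own blog address) in a browser to confirm the new rules are live. Then use the robots.txt report in Google Search Console, which replaced the older Robots.txt Tester tool, and the URL Inspection tool to check whether your rules are working as intended.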

How to Optimize Robots.txt for Google Discover?

Google Discover is a powerful platform that promotes high-quality, engaging content. Robots.txt alone cannot get your posts into Discover, but it can make sure the right pages are crawlable. Follow these tips:

- Allow high-quality content pages: Ensure that your blog posts and evergreen content are crawlable.

- Block duplicate content: Disallow archive pages, tags, and labels that create duplicate content.

- Keep sitemap updated: An updated sitemap helps crawlers find fresh content.

- Mobile-friendly design: Blogger themes should be mobile-friendly because Discover targets mobile users.

Common Mistakes to Avoid

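Don't make these mistakes while creating robots.txt:

- Blocking the entire blog: Do not write Disallow: / under User-agent: *, because it tells every crawler to skip all pages on your blog.

- Forgetting the Sitemap: Adding your sitemap URL helps crawlers find all of your posts.

- Wrong syntax: A misspelled directive or a badly formed line may be ignored by crawlers, so the rule silently stops working. Spaces around the colon are generally tolerated, but sticking to the standard "Directive: value" format is the safest choice.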
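These mistakes are easy to catch before you paste the file into Blogger. Below is a minimal Python sketch of such a check; lint_robots_txt is a hypothetical helper written for this article, it only knows the four directives used in this guide (User-agent, Allow, Disallow, Sitemap), and it is far simpler and stricter than a real crawler's parser.

```python
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap"}


def lint_robots_txt(text: str) -> list[str]:
    """Hypothetical helper: return warnings for a few common robots.txt mistakes."""
    problems: list[str] = []
    group_agents: list[str] = []  # User-agent values of the group being read
    group_has_rules = False
    has_sitemap = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and surrounding spaces
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {number}: missing ':' in {line!r}")
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        key = key.lower()
        if key not in KNOWN_DIRECTIVES:
            problems.append(f"line {number}: unknown directive {key!r}")
        elif key == "user-agent":
            if group_has_rules:  # a User-agent after rules starts a new group
                group_agents, group_has_rules = [], False
            group_agents.append(value)
        elif key == "sitemap":
            has_sitemap = True
        else:  # allow or disallow
            group_has_rules = True
            if key == "disallow" and value == "/" and "*" in group_agents:
                problems.append(f"line {number}: 'Disallow: /' under 'User-agent: *' blocks the whole blog")
    if not has_sitemap:
        problems.append("no Sitemap line found")
    return problems


for warning in lint_robots_txt("User-agent: *\nDissallow: /search\nDisallow: /\n"):
    print(warning)
```

The example input triggers all three mistakes from the list: a misspelled directive, a Disallow: / that blocks everything, and a missing Sitemap line.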

Bonus Tip: Regular Monitoring

Robots.txt is not a "set it and forget it" thing. Check from time to time that it is working properly. The Page indexing and Crawl stats reports in Google Search Console show which pages are being crawled and indexed, and which are blocked by robots.txt.

Conclusion

Using a custom robots.txt generator for Blogger can make a real difference. It helps search engines spend their time on the pages that matter, which supports your blog's visibility in search and on platforms like Google Discover. Just follow these simple steps: define your goals, generate robots.txt with a trusted tool, update it in the Blogger dashboard, and test it. With a little hard work, you can take your blog to the next level!

If you have any doubts or need more tips, ask in the comments! And don't forget to share your blog there too; we'd love to see what you are creating!

I am a resident of Maharashtra, and I like to write about social media, automobiles and news. I have been writing content for the past year, and all my articles are written in simple, straightforward language.